On Thursday I submitted my project proposal for my Part II project. An HTML version of it (generated using hevea and tidy from LaTeX with all styling stripped out) follows. (With regard to the work schedule – I appear to be one week behind already. Oops.)

Part II Computer Science Project Proposal

Models of human sampling and interpolating regular data

D. Thomas, Peterhouse

Originator: Dr A. Rice

Special Resources Required

The use of my own laptop (for development)

The use of the PWF (backup, backup development)

The use of the SRCF (backup, backup development)

The use of zeus (backup)

Project Supervisor: Dr A. Rice

Director of Studies: Dr A. Norman

Project Overseers: Alan Blackwell + Cecilia Mascolo (AFB/CM)

Introduction

When humans record information they do not usually do so in the same regular manner that a machine does, as the rate at which they sample depends on factors such as how interested in the data they are and whether they have developed a habit of collecting the data on a particular schedule. They are also likely to have other commitments which prevent them from recording at precise half-hour intervals for years. To test methods of interpolating from human recorded data to a more regular data stream, such as that which would be created by a machine, we need models of how humans collect data. ReadYourMeter.org contains data collected by humans which can be used to evaluate these models. Using these models we can then create test data sets from high resolution machine recorded data sets, try to interpolate back to the original data set, and evaluate how good different machine learning techniques are at doing this. This could then be extended with pluggable models for different data sets, which could use the human recorded data set to do parameter estimation. Interpolating to a higher resolution regular data set allows for comparison between different data sets, for example those collected by different people or relating to different readings such as gas and electricity.

Work that has to be done

The project breaks down into the following main sections:

- Investigating the distribution of recordings in the ReadYourMeter.org data set.

- Constructing hypotheses of how the human recording of data can be modelled and evaluating these models against the ReadYourMeter.org data set.

- Using these models to construct test data sets by sampling the regular machine recorded data sets to produce pseudo-human read test data sets. These can be learnt from, because the results can be compared with the reality of the machine read data sets.

- Using machine learning interpolation techniques to try to interpolate back to the original data sets from the test data sets, and evaluating the success of different methods in achieving this:

  - Polynomial fit

  - Locally weighted linear regression

  - Gaussian process regression (see Chapter 2 of Gaussian Processes for Machine Learning by Rasmussen & Williams)

  - Neural Networks (possibly using java-fann)

  - Hidden Markov Models (possibly using jahmm)

- If time allows, using parameter estimation on a known model of a system to interpolate from a test data set back to the original data set, and evaluating how well this compares with the machine learning techniques which have no prior knowledge of the system.

- Writing the Dissertation.
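As an illustration of one of the interpolation techniques listed above, the following is a minimal sketch of locally weighted linear regression in one dimension. It is not part of the proposal: the class name, the method signature and the choice of a Gaussian kernel with bandwidth `tau` are all assumptions made for the example.

```java
/** Hypothetical sketch of locally weighted linear regression in one dimension. */
class LocallyWeightedRegression {

    /**
     * Predicts y at queryX by fitting a weighted least-squares line around queryX.
     * Weights come from a Gaussian kernel with bandwidth tau (an assumed kernel choice).
     * If every weight underflows to zero the result is NaN.
     */
    static double predict(double[] xs, double[] ys, double queryX, double tau) {
        double sw = 0, swx = 0, swy = 0, swxx = 0, swxy = 0;
        for (int i = 0; i < xs.length; i++) {
            double dx = xs[i] - queryX; // centre the data on the query point
            double w = Math.exp(-(dx * dx) / (2 * tau * tau));
            sw += w;
            swx += w * dx;
            swy += w * ys[i];
            swxx += w * dx * dx;
            swxy += w * dx * ys[i];
        }
        double denom = sw * swxx - swx * swx;
        if (Math.abs(denom) < 1e-12) {
            // Degenerate case (e.g. a single point): fall back to the weighted mean.
            return swy / sw;
        }
        double slope = (sw * swxy - swx * swy) / denom;
        double intercept = (swy - slope * swx) / sw;
        // Because x was centred on queryX, the fitted line at dx = 0 is the intercept.
        return intercept;
    }
}
```

A call such as `LocallyWeightedRegression.predict(times, values, t, tau)` would give the estimate at time `t`; smaller values of `tau` make the fit follow nearby readings more closely.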

Difficulties to Overcome

The following main learning tasks will have to be undertaken before the project can be started:

- Finding a suitable method for comparing different sampling patterns, to enable hypotheses of human behaviour to be evaluated.

- Researching existing models for related human behaviour.

Starting Point

I have a good working knowledge of Java and of queries in SQL.

I have read “Machine Learning” by Tom Mitchell.

Andrew Rice has written some Java code which does some basic linear interpolation. It was written for use in producing a particular paper but should form a good starting point, at least providing ideas on how to go forwards. It can also be used for requirement sampling.

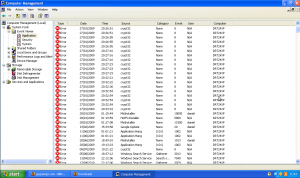

ReadYourMeter.org database

I have worked with the ReadYourMeter.org database before (summer 2009) and with large data sets of sensor readings (spring 2008).

For the purpose of this project the relevant data can be viewed as a table with three columns: “meter_id, timestamp, value”.

There are 99 meters with over 30 readings, 39 with over 50, 12 with over 100 and 5 with over 200. This data is to be used for the purpose of constructing and evaluating models of how humans record data.
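A first step in investigating the distribution of recordings could be to compute the gaps between consecutive readings for each meter from the three-column view. The sketch below is illustrative only: the class and field names are hypothetical, and it assumes timestamps are available as whole seconds since some epoch, which the proposal does not specify.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: gaps between consecutive readings, per meter. */
class ReadingGaps {

    /** One row of the three-column view: meter_id, timestamp, value. */
    static final class Reading {
        final int meterId;
        final long timestampSeconds; // assumed unit: seconds since some epoch
        final double value;

        Reading(int meterId, long timestampSeconds, double value) {
            this.meterId = meterId;
            this.timestampSeconds = timestampSeconds;
            this.value = value;
        }
    }

    /** Returns, for each meter id, the gaps in seconds between its time-ordered readings. */
    static Map<Integer, List<Long>> gapsByMeter(List<Reading> readings) {
        // Group timestamps by meter; rows may arrive in any order.
        Map<Integer, List<Long>> timesByMeter = new TreeMap<>();
        for (Reading r : readings) {
            timesByMeter.computeIfAbsent(r.meterId, k -> new ArrayList<>())
                        .add(r.timestampSeconds);
        }
        Map<Integer, List<Long>> gaps = new TreeMap<>();
        for (Map.Entry<Integer, List<Long>> entry : timesByMeter.entrySet()) {
            List<Long> times = entry.getValue();
            Collections.sort(times);
            List<Long> meterGaps = new ArrayList<>();
            for (int i = 1; i < times.size(); i++) {
                meterGaps.add(times.get(i) - times.get(i - 1));
            }
            gaps.put(entry.getKey(), meterGaps); // a meter with one reading has no gaps
        }
        return gaps;
    }
}
```

The resulting per-meter gap lists could then be histogrammed or summarised to look for the recording modes that the models are meant to capture.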

Evaluation data sets

There are several data sets to be used for the purpose of training and evaluating the machine learning interpolation techniques. These are to be sampled using the model of how humans record data constructed in the first part of the project. The data interpolated from this sampled data can then be compared with the actual data which was sampled from.

The data sets are:

- half hourly electricity readings for the WGB from 2001-2010 (131416 records in “timestamp, usage rate” format)

- monthly gas readings for the WGB from 2002-2010 (71 records in “date, total usage” format)

- half hourly weather data from the DTG weather station from 1995-2010 (263026 records)
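The evaluation loop described above (sample a regular series, interpolate back, compare with the original) can be sketched for the linear interpolation baseline as follows. This is an assumed illustration, not Andrew Rice's existing code: the class name, the use of array indices as time steps, the root-mean-square error measure and holding the end values constant outside the sampled range are all choices made for the example.

```java
/** Hypothetical sketch: score linear interpolation against a regular series. */
class InterpolationBaseline {

    /** Linearly interpolates at x from sample points; xs must be strictly increasing. */
    static double interpolate(double[] xs, double[] ys, double x) {
        int n = xs.length;
        if (x <= xs[0]) {
            return ys[0]; // assumption: hold the first value before the first sample
        }
        if (x >= xs[n - 1]) {
            return ys[n - 1]; // assumption: hold the last value after the last sample
        }
        int hi = 1;
        while (xs[hi] < x) {
            hi++;
        }
        int lo = hi - 1;
        double w = (x - xs[lo]) / (xs[hi] - xs[lo]);
        return ys[lo] + w * (ys[hi] - ys[lo]);
    }

    /**
     * Keeps only the readings at keptIndices (sorted, as if recorded by a human),
     * interpolates back to every index, and returns the root-mean-square error
     * against the original regular series.
     */
    static double rmse(double[] series, int[] keptIndices) {
        double[] xs = new double[keptIndices.length];
        double[] ys = new double[keptIndices.length];
        for (int i = 0; i < keptIndices.length; i++) {
            xs[i] = keptIndices[i];
            ys[i] = series[keptIndices[i]];
        }
        double sumSquares = 0;
        for (int i = 0; i < series.length; i++) {
            double error = interpolate(xs, ys, i) - series[i];
            sumSquares += error * error;
        }
        return Math.sqrt(sumSquares / series.length);
    }
}
```

The same scoring function could be reused for each machine learning technique by swapping out `interpolate`, which would give a like-for-like comparison against the linear baseline.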

Resources

This project will mainly be developed on my laptop, which has sufficient resources to deal with the anticipated workload.

The project will be kept in version control using Git. The SRCF, PWF and zeus will each be set up to clone the repository and fetch regularly. Simple backups will be taken at weekly intervals to SRCF/PWF and to an external disk.

Success criteria

- Models of human behaviour in recording data must be constructed which emulate real behaviour in the ReadYourMeter.org dataset.

- The machine learning methods must produce better approximations of the underlying data than linear interpolation, and these different methods should be compared to determine their relative merits on different data sets.

- The machine, once trained, should be able to apply this to unseen data of a similar class and produce better results than linear interpolation.

- A library should be produced which is well designed and documented, to allow users (particularly researchers) to easily combine various functions on the input data.

- The dissertation should be written.

Work Plan

Planned starting date is 2010-10-15.

Dates generally indicate start dates or deadlines, and each is labelled accordingly. Work items should usually be finished before the next one starts, except where indicated (extensions run concurrently with dissertation writing).

- Monday, October 18

- Start: Investigating the distribution of recordings in the ReadYourMeter.org data set

- Monday, October 25

- Start: Constructing hypotheses of how the human recording of data can be modelled and evaluating these models against the ReadYourMeter.org data set.

This involves examining the distributions and modes of recording found in the previous section and constructing parametrised models which can encapsulate this. For example, a hypothesis might be that some humans record data in three phases: first frequently (e.g. several times a day), then trailing off irregularly, until some more regular but less frequent mode is entered where data is recorded once a week/month. This would then be parametrised by the length and frequency of each stage. Within each stage, details such as the time of day would probably need to be characterised by probability distributions which can be calculated from the ReadYourMeter.org dataset.

- Monday, November 8

- Start: Using these models to construct test data sets by sampling regular machine recorded data sets.

- Monday, November 15

- Start: Using machine learning interpolation techniques to try to interpolate back to the original data sets from the test data sets, and evaluating the success of different methods in achieving this.

- Monday, November 15

- Start: Polynomial fit

- Monday, November 22

- Start: Locally weighted linear regression

- Monday, November 29

- Start: Gaussian process regression

- Monday, December 13

- Start: Neural Networks

- Monday, December 27

- Start: Hidden Markov Models

- Monday, January 3, 2011

- Start: Introduction chapter

- Monday, January 10, 2011

- Start: Preparation chapter

- Monday, January 17, 2011

- Start: Progress report

- Monday, January 24, 2011

- Start: If time allows, using parameter estimation on a known model of a system to interpolate from a test data set back to the original data set. This continues until 17th March and can be expanded or shrunk depending on available time.

- Friday, January 28, 2011

- Deadline: Draft progress report

- Wednesday, February 2, 2011

- Deadline: Final progress report printed and handed in. By this point the core of the project should be completed, with only extension components and polishing remaining.

- Friday, February 4, 2011, 12:00

- Deadline: Progress report deadline

- Monday, February 7, 2011

- Start: Implementation Chapter

- Monday, February 21, 2011

- Start: Evaluation Chapter

- Monday, March 7, 2011

- Start: Conclusions chapter

- Thursday, March 17, 2011

- Deadline: First draft of dissertation (by this point revision for the exams will be in full swing, limiting time available for the project, and time is required between drafts to allow people to read and comment on it)

- Friday, April 1, 2011

- Deadline: Second draft dissertation

- Friday, April 22, 2011

- Deadline: Third draft dissertation

- Friday, May 6, 2011

- Deadline: Final version of dissertation produced

- Monday, May 16, 2011

- Deadline: Print, bind and submit dissertation

- Friday, May 20, 2011, 11:00

- Deadline: Dissertation submission deadline
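
The three-phase hypothesis given as an example in the work plan (frequent recording, then trailing off, then a more regular but less frequent mode) could be sketched as a parametrised generator of reading times. This is only an illustration under stated assumptions: the class and parameter names are hypothetical, each phase is simplified to a fixed length and a single mean gap, and drawing exponentially distributed gaps is an assumption of the example. The proposal itself expects the distributions to be estimated from the ReadYourMeter.org dataset.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Hypothetical sketch of a phased model of when a human records readings. */
class PhasedRecordingModel {

    /** One phase: how long it lasts and the mean gap between readings within it. */
    static final class Phase {
        final double lengthDays;
        final double meanGapDays;

        Phase(double lengthDays, double meanGapDays) {
            this.lengthDays = lengthDays;
            this.meanGapDays = meanGapDays;
        }
    }

    /**
     * Generates reading times (in days from the start) phase by phase.
     * Gaps within a phase are drawn from an exponential distribution
     * (an assumption of this sketch, not something taken from the data).
     */
    static List<Double> sampleTimes(List<Phase> phases, Random rng) {
        List<Double> times = new ArrayList<>();
        double phaseStart = 0.0;
        for (Phase phase : phases) {
            double phaseEnd = phaseStart + phase.lengthDays;
            double t = phaseStart;
            while (true) {
                // Inverse-transform sampling: -mean * ln(U) with U in (0, 1].
                t += -phase.meanGapDays * Math.log(1.0 - rng.nextDouble());
                if (t >= phaseEnd) {
                    break;
                }
                times.add(t);
            }
            phaseStart = phaseEnd;
        }
        return times;
    }
}
```

For example, three phases of (7 days, mean gap 0.25 days), (30 days, mean gap 3 days) and (300 days, mean gap 30 days) would roughly mimic the behaviour described; the generated times could then be used to pick which machine-recorded readings to keep in a test data set.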